Use AI to Generate Art

While text-based AI tools like ChatGPT are incredibly powerful, I personally find text-to-image art generators like Midjourney even more fascinating.

You can use AI-generated images from Midjourney to create a children's book, animated AI avatars, or comics. AI can generate unique, customizable images quickly, allowing you to bring your ideas to life in new and exciting ways.

In this article, we’ll look at the different ways you can use Midjourney and other text-to-image AI tools at work.

Creating photorealistic animated avatars entirely using AI

Max is a YouTuber who runs the channel Maximize.

He used the following prompt on Midjourney to turn a picture of himself into an avatar.

Prompt: /imagine [picture's link] male pixar character

And this is what he got:

If you don't want to use your own picture, you can create an entirely artificial, photorealistic person with Midjourney, using a prompt I found on Reddit:

Prompt: /imagine A medium shot of a white woman wearing a T-shirt, captured with a Nikon D850 and a Nikon AF-S NIKKOR 70-200mm f/2.8E FL ED VR lens, lit with high-key lighting to create a soft and ethereal feel, with a shallow depth of field --ar 2:3

This prompt, and other variations like it, produce images like this:

The people shown in the pictures above don't exist. No reference images were used (at least not in the prompt). Midjourney came up with these realistic faces all on its own.
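The `--ar 2:3` at the end of the prompt asks Midjourney for a 2:3 width-to-height aspect ratio, in other words a portrait image. As a rough illustration of the arithmetic, here is a minimal Python sketch. The helper names and the 1024-pixel width are assumptions made up for this example; Midjourney chooses the actual output resolution itself.

```python
def parse_aspect_ratio(ar: str) -> tuple[int, int]:
    """Split a 'W:H' string such as '2:3' into integer width and height parts."""
    width_part, height_part = ar.split(":")
    w, h = int(width_part), int(height_part)
    if w <= 0 or h <= 0:
        raise ValueError(f"aspect ratio parts must be positive: {ar!r}")
    return w, h


def height_for_width(ar: str, width_px: int) -> int:
    """Return the height in pixels that matches width_px at the given ratio."""
    w, h = parse_aspect_ratio(ar)
    return round(width_px * h / w)


if __name__ == "__main__":
    # 1024 is an arbitrary example width, not a Midjourney output size.
    print(height_for_width("2:3", 1024))
```

A 2:3 image is taller than it is wide, which suits a medium shot of a single person. Swapping the numbers to `3:2` would give a landscape frame instead.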

Writing a script for your avatar

The next part involves writing a script for your character that you can use for a voiceover. This can be done with the help of ChatGPT (or you can always write your own).

For this part, Max just used the following prompt:

Prompt: Write me an outro for a YouTube video telling people to watch more and subscribe

ChatGPT created the following script, and then Max moved on to the next part of the avatar creation: the animation.

Animating your avatar

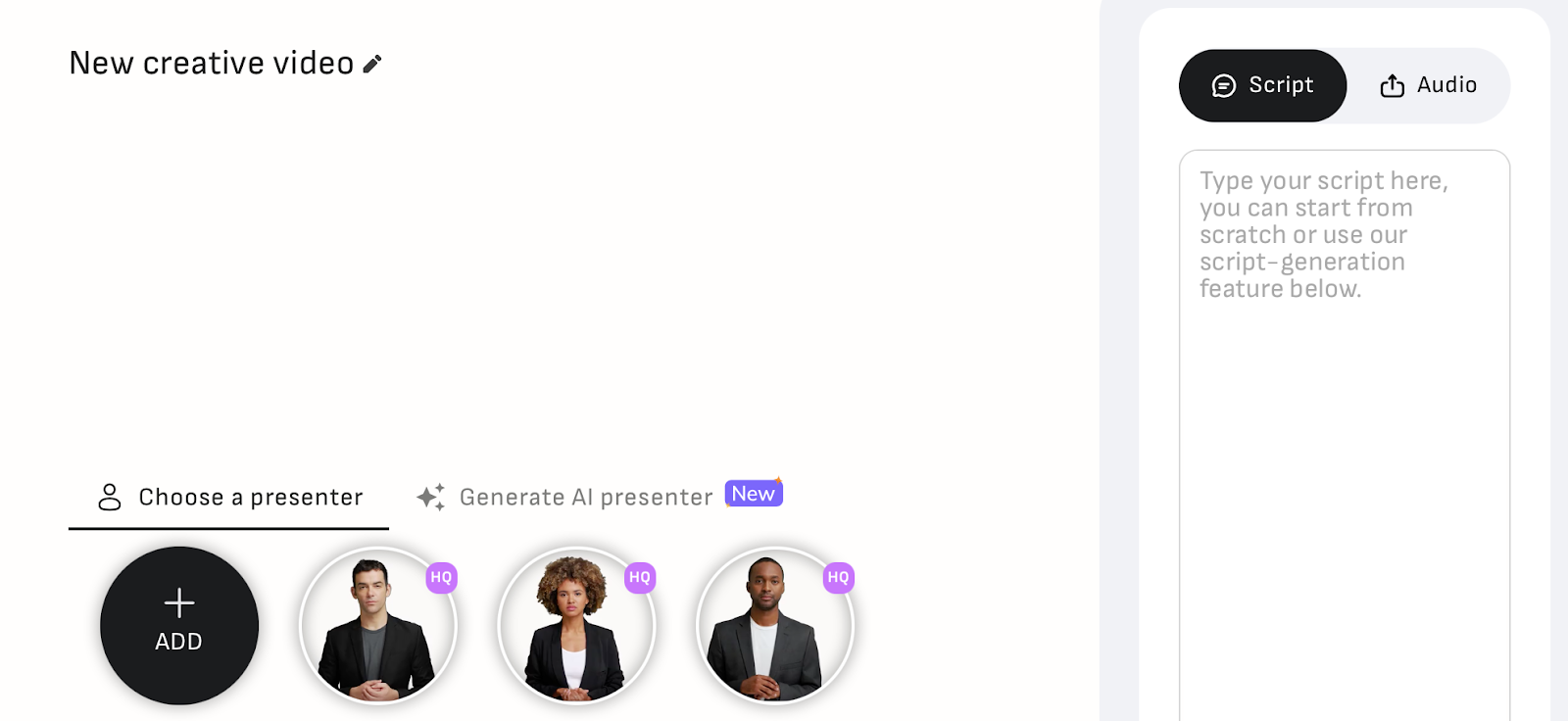

For animation, Max used D-ID. It's an AI tool that maps the facial movements of any image you upload to a script. It automatically generates a voiceover for you too.

To add your avatar, you can just click on the "Add" button and upload a picture of your avatar. Pro-tip: you can also use your real picture.

Next, you paste your script in the box on the right, or you can upload an audio file.

If you upload a pre-recorded voice, D-ID maps the movement of the facial features to it. However, when I tried it, using a pre-recorded voice significantly reduced the quality of the audio. I suggest letting D-ID generate the voiceover.

Animated avatars allow you to essentially create a life-like person that talks. It was possible to do this before the current AI explosion by creating 3D models, mapping the motion of their facial features for each word, and then adding a voiceover. But as you can probably tell, it took hours. Now, with AI tools, we can get the job done much faster.

Limitations and possible improvements

Sounds robotic - D-ID creates a very robotic-sounding voice, even if you use the options to include emotions. You can use ElevenLabs to create the audio instead, but remember that D-ID makes the sound quality of uploaded audio noticeably worse when it creates the video. For that reason, I recommend just making do with the robotic voice.

Out of sync - If the robotic voice is a deal-breaker for you, you can make the video with D-ID from your script, generate a natural-sounding voice from the same script with ElevenLabs, and combine the two in a video editor like iMovie. But when I tried this, the character's facial movements and the voice were very hard to sync. I spent a couple of hours on it, and I couldn't get it exactly right. So, if you can live with the drop in sound quality, it is simpler to generate the voiceover with ElevenLabs and upload it to D-ID.

America first - Paid plans at ElevenLabs also let you generate synthetic speech in your own voice. All you need to do is upload some voice samples, and it will create a voice that mimics your pronunciation. But it's not perfect, especially if you don’t have an American accent. The voice comes out sounding significantly different from how you actually sound.

Regardless of these limitations, there's no denying that you can creatively use these AI avatars for different videos and entertainment purposes, from creating faceless YouTube videos to delivering the news, like India's first AI avatar news anchor.

If you would like to see Max's entire workflow, you can view it in this video.

Using AI to write a children's book

The Zinny Studio (TZS) is an up-and-coming YouTube channel that explained how the process of creating, publishing, and selling children's books is now accessible to everyone.

With a simple prompt, TZS used ChatGPT to get their book written.

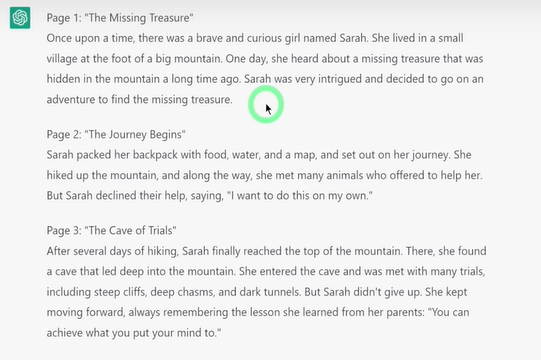

Prompt: Write me a children's story about a girl going on an adventure to find a missing treasure. Lesson: "You can achieve what you put your mind on". Make it 6 pages and title each page. Make it mind blowing and intriguing.

The prompt produced the following story:

Creating pictures for each page

Next, they asked ChatGPT to describe each page using adjectives and verbs, because that helps create a visual description of each page. The descriptions are then used to generate the images with Midjourney.

Prompt: Describe each page in adjectives and verbs so I can describe to an illustrator

And with that single prompt, ChatGPT creates visual descriptions of each page because it remembers the outputs from before:

But these aren't descriptive prompts that can be used with Midjourney, so they fixed that with another prompt:

Prompt: Write it again but include what person should be in the picture

And with that prompt, you will get a descriptive text that can be used directly with Midjourney:

Generating images for the book with Midjourney

Next, we will use the descriptions we generated earlier as prompts for Midjourney.

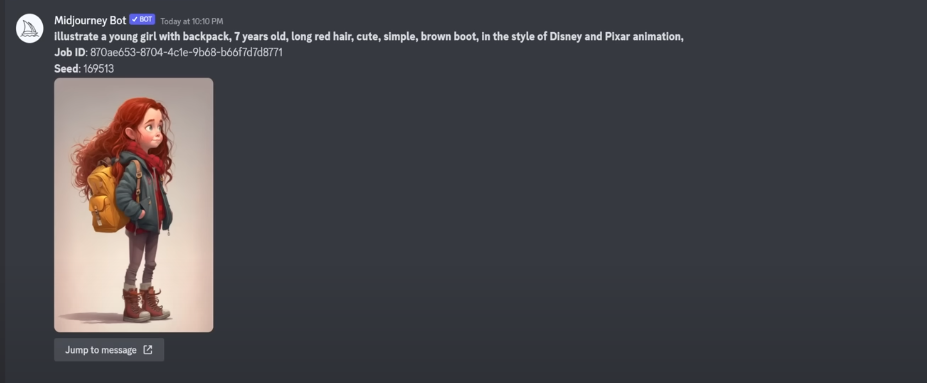

First, we need to create our main character. For this video, TZS used the following prompt:

Prompt: /imagine illustrate a young girl with backpack, 7 years old, long red hair, cute, simple, brown boot, in the style of Disney and Pixar animation,

Midjourney will then create the image for us. We can select the one we want and keep repeating the process to generate consistent characters, as Christian describes in the section on consistent characters below.

Once we have our character ready (and fairly consistent), we can use its link and seed number to create it in different scenes based on the descriptions generated by ChatGPT. The way TZS approached it is as follows:

Prompt: /imagine [link to the character's image] [description generated by ChatGPT or variations of it] --seed [seed number for the image]
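If your book has several pages, filling in this template by hand gets repetitive. A minimal Python sketch of the same idea is shown below. The function names, the example URL, the page descriptions, and the seed are all placeholders invented for illustration. The script only builds the text of each command, which you would still paste into Midjourney yourself.

```python
def build_scene_prompt(character_url: str, description: str, seed: int) -> str:
    """Assemble one /imagine command: image link, scene text, then --seed."""
    # Collapse whitespace so pasted multi-line descriptions stay on one line.
    text = " ".join(description.split())
    return f"/imagine {character_url} {text} --seed {seed}"


def build_book_prompts(character_url: str, page_descriptions: list[str], seed: int) -> list[str]:
    """Return one prompt per page, all sharing the same character link and seed."""
    return [build_scene_prompt(character_url, d, seed) for d in page_descriptions]


if __name__ == "__main__":
    # Hypothetical values: replace them with your own image link, seed,
    # and the page descriptions that ChatGPT generated for your story.
    pages = [
        "a young girl studies an old treasure map in her bedroom",
        "a young girl hikes through a misty forest",
    ]
    for prompt in build_book_prompts("https://example.com/character.png", pages, 1234):
        print(prompt)
```

Keeping the character link and the seed fixed while only the description changes mirrors the approach TZS describes: every page starts from the same reference.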

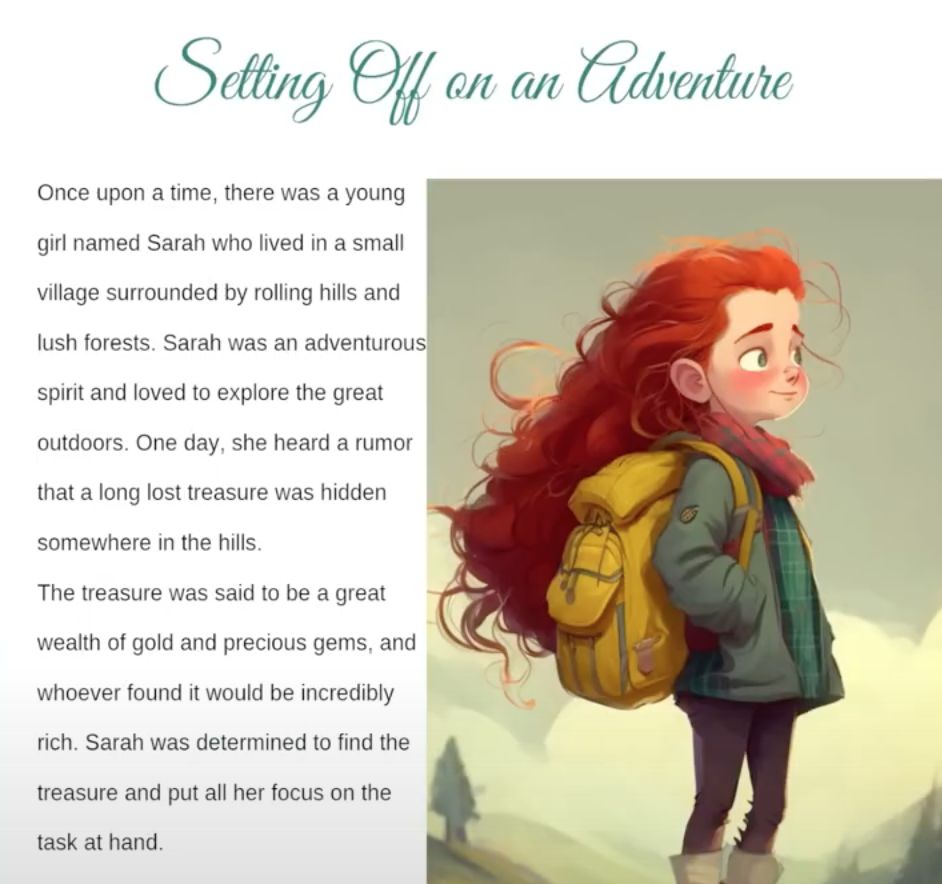

Midjourney will then generate images that you can use on different pages of your book, for instance, the one shown below:

Designing your book with Canva

Lastly, all you need to do is basic editing in Canva to bring the story and the images together to create a captivating storybook.

Upload the images generated from Midjourney to Canva and paste the text for each page onto it. Just like that, you have a book ready to go:

If you are interested in a more in-depth explanation of the non-AI parts of this workflow, you can view the entire video here.

Consistent characters in different settings with Midjourney

One of the problems with using generative art to create books is that it can be hard to keep the style of images and characters consistent across a project. Using the same prompt twice will produce different images. This means you can't simply create a character once and reuse it in different settings.

We’re going to explore a few rather tedious ways you can work towards visual consistency in AI-generated images.

Generating consistent characters with a seed

This technique comes from Christian Heidorn, a content creator on YouTube who runs the channel Tokenized AI by Christian Heidorn.

Spoiler alert: there's no easy way to generate consistent characters. It involves running the same prompt with new seed numbers over and over again until you start getting something that you like.

Here's the prompt that Christian used on Midjourney, and he ran it three times:

Prompt: /imagine Carla Caruso is a beautiful woman with braided bun hair and bright red hair, and she is wearing a leather jacket in gray color

Now, there's a reason why Christian used a name in this prompt. He claims that using a name helps Midjourney associate the facial features with that name, which can then be called over and over to generate similar facial features - and he claims that this has been confirmed by Midjourney too.

After he got the results from his three repeated prompts, he picked out the image he liked the most and upscaled it by clicking on the corresponding "U2" button and then moved to the next important bit.

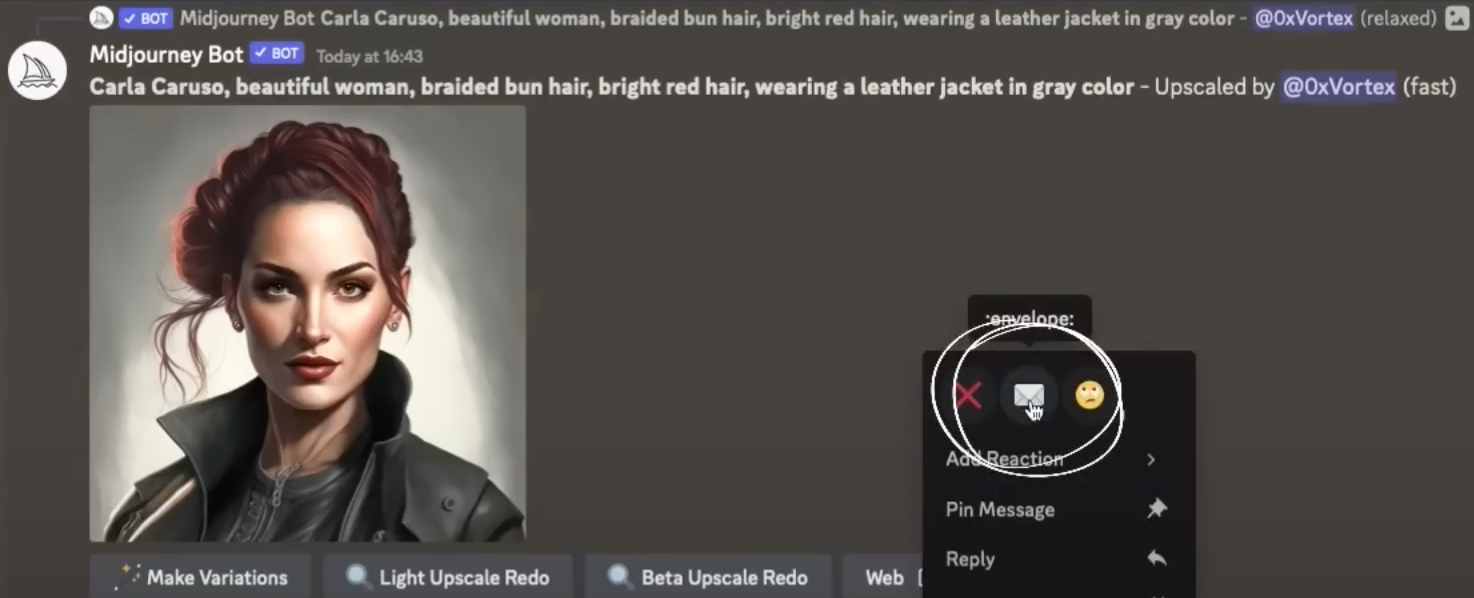

He right-clicked on the message sent by the Midjourney bot and reacted to it with the envelope icon (you can also do that using the react button on the right of the message):

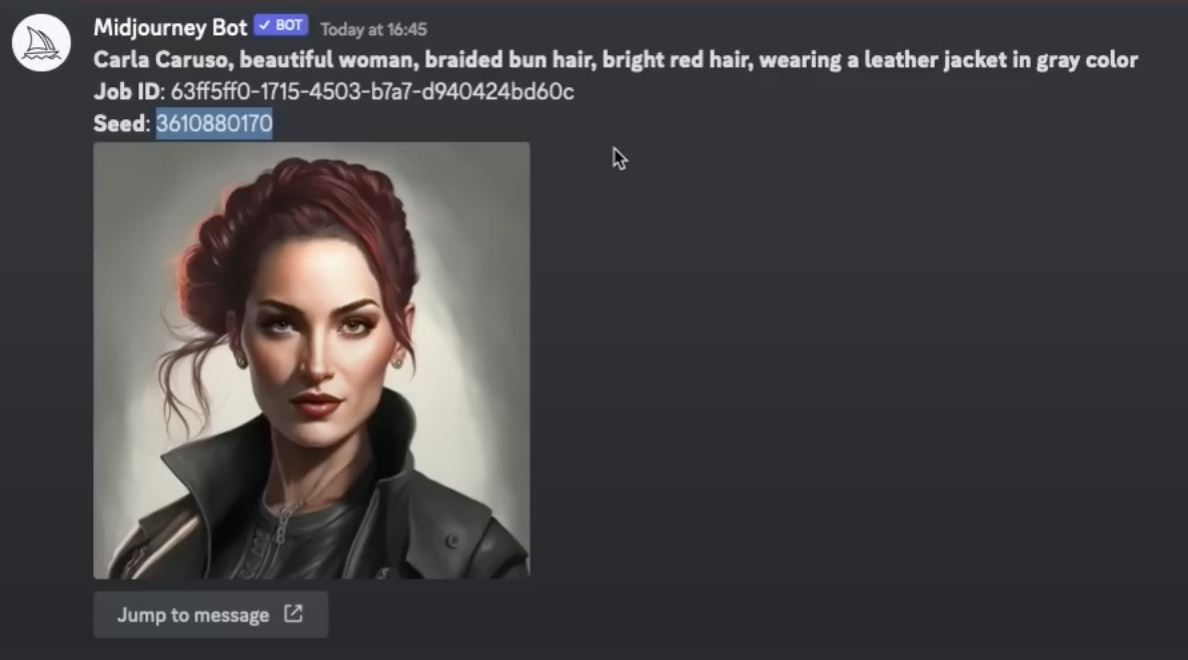

Reacting with the envelope icon causes the Midjourney bot to send you a private message with the seed number for the image:

Christian then used this seed number in his subsequent iterations to generate similar images using the same prompt with the addition of the seed number.

Prompt: /imagine Carla Caruso is a beautiful woman with braided bun hair and bright red hair, and she is wearing a leather jacket in gray color --seed 3610880170

When Midjourney came back with the result, Christian selected the image he liked, upscaled it, got its seed number, and ran the same command again, changing only the seed number. He ran 15 iterations of this:

Prompt: /imagine Carla Caruso is a beautiful woman with braided bun hair and bright red hair, and she is wearing a leather jacket in gray color --seed [seed number of every image you like after upscaling it]
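Retyping the prompt for every round invites copy-paste mistakes, such as leaving the old seed in place. The hedged Python sketch below swaps the seed in an existing prompt. The function name `with_seed` and the second seed value are made up for this example, and the script only edits text; getting each new seed from the Midjourney bot is still a manual step.

```python
import re

# Matches an existing "--seed <digits>" parameter plus the spaces before it.
_SEED_PATTERN = re.compile(r"\s*--seed\s+\d+")


def with_seed(prompt: str, seed: int) -> str:
    """Return the prompt with any old --seed removed and the new one appended."""
    if seed < 0:
        raise ValueError("seed must be non-negative")
    stripped = _SEED_PATTERN.sub("", prompt).strip()
    return f"{stripped} --seed {seed}"


if __name__ == "__main__":
    base = (
        "/imagine Carla Caruso is a beautiful woman with braided bun hair "
        "and bright red hair, and she is wearing a leather jacket in gray color "
        "--seed 3610880170"
    )
    # 123456 is a hypothetical seed standing in for the one the bot sends you.
    print(with_seed(base, 123456))
```

Each round, you would pass in the seed of the image you just upscaled, so the description stays identical and only the seed changes.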

And at the end of the 15 iterations, here's what the final image looked like:

But now Christian wanted to edit it in the style of The Matrix, and he used the following prompt:

Prompt: /imagine Carla Caruso is a beautiful woman with braided bun hair and bright red hair, and she is wearing a leather jacket in gray color, in the style of The Matrix --seed [seed number of every image you like after upscaling it]

He ran the command several times, tweaking different parameters, and this is what he got as one of the results. As you can see, it looks pretty similar, but it's not exactly the same.

However, you need to remember that generative AI models are always going to produce a little variation. If you are a skilled digital artist who wants to create identical characters, you can easily edit these pictures using a professional photo editing tool. I mean, it's certainly a far more convenient starting point than having to draw this character entirely from scratch.

But if you don't mind the little inconsistencies, Midjourney does a pretty good job.

Later in this article, I will also talk about another AI tool, Playground AI, that you can use to edit these pictures without professional photo-editing skills; it's a text-based AI photo generator and editor.

If you are interested in learning about Christian's entire workflow, you can view his video here.

Putting characters in different backgrounds with reference images

Now, once you can create fairly consistent characters, you might want to start putting them in different settings. Doing that might seem like it requires a lot of iterations, and it probably does if you want the result to look exactly how you envision it.

However, Olivio Sarikas, a professional web and print designer with an MA in fine arts, has found a way to do it with a simple prompt.

On his YouTube channel, Olivio posted a video where he selected two images: one from which to extract the character and another from which to take the background. This means that if you don't already have premade characters and backgrounds, you will need to generate them first.

Olivio copied the links from the two images and took the prompt of the image that he used for the background (the image on the right). He edited this prompt to fit his needs better, and here's what the final prompt looked like:

Prompt: /imagine [link of the first image] [link of the second image] wide shot, beautiful young fae queen on her throne, blonde hair, wearing a red and white uniform, delicate, detailed, elaborate, silver filigree, photorealistic, hd, soft light, --ar 2:3 --v 4 --q 2

And here is the result he got:

Now, this is obviously not perfect, and I believe that's the biggest limitation for Midjourney at this point.

You can't use these characters for professional work without heavy editing, but you can't deny that these are great starting points. It would be much easier for an artist to edit this image than to conceive a character from scratch.

If you are interested in learning about Olivio's entire workflow, you can view his video here.

Limitations and possible improvements

Full body sheet - You may notice that an artist who wants to use these characters as a starting point can't see the full body. According to a Reddit post, you can get the full body with a slight adjustment to the prompt: add the phrase "full body reference sheet", and Midjourney will create images of the character from different angles.

Prompt from another Reddit post: anime girl, gradient green hair, sweet, smiling, character sheet, full body reference sheet, character model, detailed, 8k --ar 1:1 --q 5 --v 4

Text-based photo editing - You can also use Playground AI to edit these pictures with text-based descriptions of the edits. It seems very powerful. For instance, I took the image below, covered it using the "mask" feature (red highlight in the picture below) on Playground AI's photo editor, and then used a simple prompt.

Prompt: Make the hair blonde, make the clothes black

As you can see, it worked. It made the hair blonde and followed my color preference for the clothes, but it significantly reduced the image quality. Playground AI's paid plans allow you to create images at resolutions of up to 1536 pixels in height or width.

AI art and the copyright controversy

We have talked about numerous ways that can help you in generating AI art. However, the fact remains that there are still some controversies surrounding AI art.

Aside from the whole debate about AI art vs. artists, there are some copyright issues that can become a hurdle, especially if you want to use AI tools to create copyright-protected art.

A classic case study is that of Zarya of the Dawn, a graphic novel created using Midjourney by the artist Kristina Kashtanova.

The United States Copyright Office (USCO) declined to grant copyright protection to the images generated by Kristina.

The thing is that some of these images weren't entirely AI-generated. Kristina claims that she spent hours of work coming up with hundreds or thousands of prompts and then editing the pictures to make them look visually correct for her book.

However, the USCO maintained that images generated by Midjourney couldn't be offered copyright protection because there isn't significant human involvement in the process, as humans can't control it.

But it wasn't all a loss, because the USCO allows artists to make their work copyrightable by adding more human elements to it. For instance, it granted protection to Kristina's text and to the selection and arrangement of the images.

So, while it wasn't a total loss for Kristina, it's clear that currently, any AI-generated artwork won't be offered copyright protection by the USCO, despite the fact that Kristina did put in the "human element" by editing her Midjourney-generated images.

Conclusion

AI-generated art is fascinating and magical in all the right ways, but it's still limited in many practical ways. You can't generate 30-60 perfectly consistent images with Midjourney and use them for a video.

And even if you were somehow able to manage that, your video's art wouldn't be copyright protected - at least for now.

Regardless, AI-generated art has really leveled the playing field, and once the copyright issues get sorted out, I am certain that it will help bring on a new age of entertainment.

Further reading

AI Tools for YouTube: Making Faceless YouTube Videos in Minutes