ChatGPT Prompt Cheat Sheet

This blog post proposes five questions that you can use to improve any GPT prompt significantly. The questions are:

Have you specified a persona for the model to emulate?

Have you provided a clear and unambiguous action for the model to take?

Have you listed out any requirements for the output?

Have you clearly explained the situation you are in and what you are trying to achieve with this task?

Where possible, have you provided three examples of what you are looking for?

The rest of this post explains where these five questions came from, how to use them, and what to do when you reach their limits and need to get more out of your GPT.

P.A.R.S.E. - A Scorecard for Writing Better Prompts

Let’s start with an example: someone wants to use ChatGPT to write a blog post, and they come up with the following two-part prompt:

"I want to write a blog type content on a 10 or so point checklist that shows your UI/UX design agency needs a new positioning (April Dunford). Give me 10 different topics for this idea"

“I like this one: 10 Symptoms of Poor Positioning That Are Hurting Your UI/UX Design Agency. Now I want you to write the blog post for me. Pay particular emphasis on make this piece of content not easily intuited, new and worthwhile. Also add resonance through examples. But before that, Clarify back to what I'm asking you to do and I'll prompt you to continue"

There are a lot of good techniques here. Asking GPT to clarify if it understands the instruction is an excellent move. There is also much room for improvement, so let’s see how we can improve the prompts they used.

I combed through OpenAI’s best practices guide and their help docs, pulling out each recommendation as I went. Then, I went through a similar guide for Claude, a competing model from Anthropic. That guide is arguably even more detailed and helpful than OpenAI’s.

I clustered all of the recommendations from both guides and then rephrased them into the following 15 Yes/No questions:

Have you provided clear and unambiguous instructions to avoid leaving room for implicit assumptions?

Have you asked the model to adopt a specific persona?

Have you explained to GPT why you want the task done?

Have you used delimiters like ### or """ or XML tags to mark different parts of the prompt?

Are you starting your answer in the way that you would like GPT to continue?

If you're providing a long document, have you placed the instructions after the document?

Have you described and instructed GPT on how to handle any difficult or unusual cases it may encounter in your input?

Where possible, have you remembered to add at least three examples to your prompt?

Have you asked GPT to evaluate its own outputs?

Have you instructed GPT on what to do if it doesn't find the required information in a given text (avoiding hallucinations)?

Have you checked if GPT has understood your instructions by asking it?

If GPT's response is close but not exact, have you asked GPT to rewrite it?

Have you provided reference text and used direct citations when necessary?

Have you asked the model if it missed anything on previous passes?

If using ChatGPT, have you placed the instructions at the beginning of the prompt? If using Claude, have you placed the most important instructions at the end of the prompt?

I asked ChatGPT to evaluate the original prompt against all of the questions and provide suggestions for how the prompt could be improved. This is what it came up with:

"I want to write a blog-type content on a 10-point checklist that shows your UI/UX design agency needs a new positioning, based on the concepts of April Dunford. I'm aiming for a clear, concise, and insightful piece that highlights the key signs that an agency might need to reassess its positioning.

Give me 10 different topics that could fit within this idea. Each topic should be a potential sign or issue that suggests a need for new positioning. These topics will form the basis of my checklist.

For example, one topic might be 'Your agency's value proposition is unclear to potential clients.' Another could be 'Your current positioning is not differentiated from competitors.' A third might be 'Your agency's positioning does not align with its actual strengths and capabilities.'

If you encounter a topic that is difficult to define or explain, provide a brief explanation and suggest how it could be expanded upon in the blog post. If you can't find enough information or struggle with a topic, let me know and move on to the next one.

Once you've generated the list, review your outputs and make sure they are clear, accurate, and relevant to the prompt. If they don't quite hit the mark, try to rewrite them for better clarity or relevance.

I haven't provided a reference text for this task, but feel free to draw upon general knowledge of UI/UX design and agency positioning. Please remember to stay within the scope of the task.

Lastly, can you confirm that you've understood the task and are ready to proceed?"

And that’s just the first part of the two-part prompt!

This is clearly overkill.

The original prompt was good enough. Investing this kind of effort to optimize a relatively straightforward prompt like this is just a waste of time, not to mention a waste of tokens. Apply this kind of optimization to a team’s worth of prompts, day in, day out for months, and the costs would rack up quickly.

Part of the problem here is that not all optimization factors have the same weight. For example, telling GPT to pretend to be an expert can have a massive impact on the quality of the response. On the other hand, using delimiters might help reduce confusion in a long prompt, but it won’t give you the same qualitative bump in output quality.

A more practical solution would be some shorthand for the highest impact factors. Rob Lennon has developed the hugely popular acronym ASPECCT which is perfect for this job.

ASPECCT stands for including each of the following components in a prompt

An Action to take

Steps to perform the action

A Persona to emulate

Examples of inputs and/or outputs

Context about the action and situation

Constraints and what not to do

A Template or desired format for the output

This is a fantastic framework to think through when conceptualizing a prompt. I have used it extensively and gotten lots of mileage out of it.

My only problem with this approach is that it breaks down when applied to multi-step workflows. I have found GPTs most helpful when you run through a sequence of individual prompts. The S in ASPECCT implies that you list steps to run through in one large prompt. I disagree with the premise behind mega-prompts. I prefer building out sequences of smaller, more specialized prompts.

What I’m looking for is a version of ASPECCT that you can apply to each prompt in sequence.

I’ve settled on the following five questions:

Have you specified a persona for the model to emulate?

Have you provided a clear and unambiguous action for the model to take?

Have you listed out any requirements for the output?

Have you clearly explained the situation you are in and what you are trying to achieve with this task?

When possible, have you provided three examples of what you are looking for?

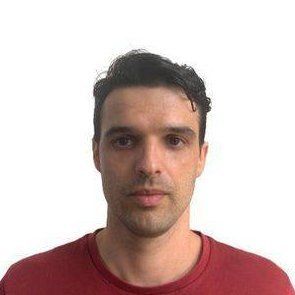

I used these questions to evaluate the original prompt, using the following meta-prompt:

Please evaluate the following prompt against the following yes/no questions. Assign 2 points for yes and provide suggestions for how the prompt could be improved when the answer is no.

Please evaluate the following prompt against the following questions. Assign 0 points to a question if it’s a No, 1 point if it’s a YES but could be improved, and 2 points if it’s a clear YES. Please make suggestions for improvements where you can. Then tally up my score at the end and express the total as a percentage. The final output should be a table, with all of the suggestions listed at the bottom.

###

Prompt:

"I want to write a blog type content on a 10 or so point checklist that shows your UI/UX design agency needs a new positioning (April Dunford). Give me 10 different topics for this idea"

###

Questions:

Have you specified a persona for the model to emulate?

Have you provided a clear and unambiguous action for the model to take?

Have you listed out any requirements for the output?

Have you clearly explained the situation you are in and what you are trying to achieve with this task?

When possible, have you provided 3 examples of what you are looking for?
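If you want to reuse the scorecard without retyping it, the meta-prompt and the tally are easy to script. What follows is a minimal Python sketch, not something from the original workflow: the names `build_scorecard_prompt` and `score_percentage` are made up for illustration, and the code only builds the text and does the arithmetic. Sending the prompt to a model is left out.

```python
# Hypothetical helpers for the five-question scorecard (names are illustrative).
PARSE_QUESTIONS = [
    "Have you specified a persona for the model to emulate?",
    "Have you provided a clear and unambiguous action for the model to take?",
    "Have you listed out any requirements for the output?",
    "Have you clearly explained the situation you are in and what you are "
    "trying to achieve with this task?",
    "When possible, have you provided 3 examples of what you are looking for?",
]

INSTRUCTIONS = (
    "Please evaluate the following prompt against the following questions. "
    "Assign 0 points to a question if it's a No, 1 point if it's a YES but "
    "could be improved, and 2 points if it's a clear YES. Please make "
    "suggestions for improvements where you can. Then tally up my score at "
    "the end and express the total as a percentage. The final output should "
    "be a table, with all of the suggestions listed at the bottom."
)


def build_scorecard_prompt(prompt: str) -> str:
    """Wrap a prompt in the scorecard meta-prompt, using ### as the delimiter."""
    questions = "\n".join(PARSE_QUESTIONS)
    return (
        f"{INSTRUCTIONS}\n\n###\n\nPrompt:\n\n\"{prompt}\"\n\n"
        f"###\n\nQuestions:\n\n{questions}"
    )


def score_percentage(scores: list[int]) -> float:
    """Turn one 0/1/2 score per question into a percentage of the maximum."""
    if len(scores) != len(PARSE_QUESTIONS):
        raise ValueError("expected one score per question")
    if any(score not in (0, 1, 2) for score in scores):
        raise ValueError("each score must be 0, 1, or 2")
    return 100 * sum(scores) / (2 * len(PARSE_QUESTIONS))
```

With five questions worth up to 2 points each, the maximum is 10, so the invented scores `[2, 2, 1, 1, 0]` give `score_percentage([2, 2, 1, 1, 0]) == 60.0`. Those scores are only there to show the arithmetic; they are not the result of a real evaluation.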

This is a practical scorecard for the highest impact factors that you can use to evaluate any prompt and tweak it accordingly.

The result of the meta-prompt above is a massive improvement over the original, but I think it’s important to understand the rationale behind each question so that you don’t have to rely on a meta-prompt the next time you want to PARSE your own prompts.

Have you specified a persona for the model to emulate?

For some reason, asking GPT to pretend to be an expert often yields significantly better results than just asking your question directly. Instead of asking it to be a generic expert, you can specify the expertise best suited for the job. For example, “Act as an experienced playwright,...” or “Pretend to be a marketing expert with extensive experience in the social media space,...”. This is not a silver bullet, but the effort-to-reward ratio on this is so low that it doesn’t make sense not to get your GPT to assume the ideal role before answering.

Have you provided a clear and unambiguous action for the model to take?

Sometimes, people write long-winded train-of-thought style prompts that don’t have an explicit request or end up having multiple overlapping requests. GPTs generally perform better when each prompt is worded as a single, focused task. If you find yourself writing a long-winded prompt, that’s fine. Make sure you pull out the specific action you want it to perform and add it to the beginning of your prompt. ChatGPT performs better when the task comes at the beginning of the prompt (refer to point #2). On the other hand, Claude performs better when the action comes at the end of the prompt (refer to point #6).

Have you listed out any requirements for the output?

“GPTs can’t read your mind. If outputs are too long, ask for brief replies. If outputs are too simple, ask for expert-level writing. If you dislike the format, demonstrate the format you’d like to see. The less GPTs have to guess what you want, the more likely you’ll get it.” This is the opening paragraph from the first of OpenAI’s Six strategies for getting better results from GPT. If you are not sure what to specify, then start with the type of output, its length, format, and style. The documentation also encourages you to list these requirements as bullet points and to phrase them in the affirmative. This is why they are called requirements and not constraints. “End every sentence with a question mark or full stop” is a clearer requirement than “Don’t use exclamation marks” because the latter has the phrase “use exclamation marks” embedded in it.

Have you clearly explained the situation you are in and what you are trying to achieve with this task?

The more helpful context you give a prompt, the easier it is for a GPT to produce what you want. A good framework for considering what context to provide is to imagine GPT as an intelligent intern. You want to tell the intern why they are doing the task so they can figure out all of the implicit expectations built into the task that haven’t been explicitly listed in the action or requirements section.

Where possible, have you provided three examples of what you are looking for?

We’ve truly saved the best for last here because giving GPT examples means you can ignore all the other instructions. If you have a few examples of what you want, GPT will often discern what you want more precisely than you can describe the task. Giving GPT examples is the most effective and overlooked way to get more out of your prompts. The only problem is that in most situations, you won’t have an example. If you did, then you wouldn’t need to use ChatGPT. So, when you have an example of the output you want, always include it. Apparently, three is the ideal number of examples.

These five questions are far from perfect, but I will never remember all 15 questions from the best practices guides. Additionally, the first letters of the key words in the five questions (Persona, Action, Requirements, Situation, Examples) spell out PARSE, which is easy to remember and appropriate for the context.
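To make the five components concrete, here is one way they could be assembled into a single prompt. This is a rough Python sketch under my own assumptions: the `ParsePrompt` class, its field names, the section labels, and the `action_last` switch are all hypothetical, and the layout is just one reasonable option. The switch reflects the claim above that ChatGPT prefers the action first while Claude prefers it last.

```python
from dataclasses import dataclass, field


@dataclass
class ParsePrompt:
    """Hypothetical container for the five PARSE components."""

    persona: str                      # P: who the model should emulate
    action: str                       # A: the single, focused task
    requirements: list[str] = field(default_factory=list)  # R: output rules
    situation: str = ""               # S: context and what you want to achieve
    examples: list[str] = field(default_factory=list)      # E: sample outputs

    def render(self, action_last: bool = False) -> str:
        """Join the components into one prompt, skipping any that are empty."""
        parts = [f"Act as {self.persona}."]
        if not action_last:
            parts.append(self.action)
        if self.situation:
            parts.append(f"Situation: {self.situation}")
        if self.requirements:
            bullets = "\n".join(f"- {item}" for item in self.requirements)
            parts.append(f"Requirements:\n{bullets}")
        for number, example in enumerate(self.examples, start=1):
            # Triple quotes act as delimiters around each example.
            parts.append(f'Example {number}:\n"""\n{example}\n"""')
        if action_last:
            parts.append(self.action)
        return "\n\n".join(parts)
```

Calling `render()` puts the action right after the persona, and `render(action_last=True)` moves it to the end. Requirements are rendered as bullet points, in line with the advice above to list them that way.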

What to do when you get mediocre results

Generative AI tools like ChatGPT and Claude break down when tasks get complex. A technique called "prompt chaining", where you break a big task into smaller tasks, can be more effective than giving the AI one big task to do all at once.

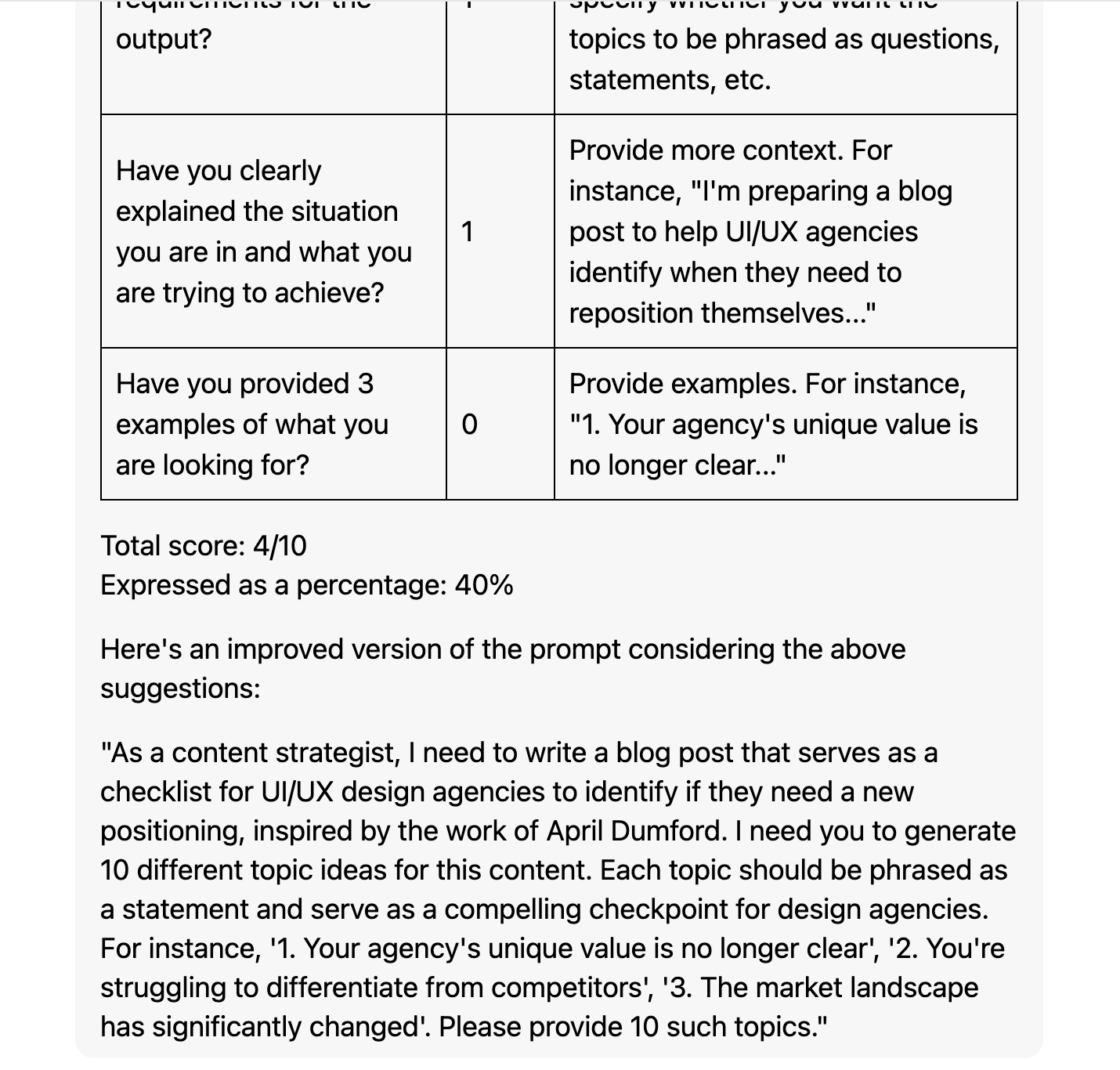

A great example of this comes from the official guide for the AI system, Claude, created by Anthropic. The example they use is getting the GPT to answer a question from a document.

First, they ask GPT to find quotes in the document that relate to the question.

Then, they feed these quotes into the next prompt and ask GPT to answer the question.

This is a relatively complex task, and breaking it into two focused steps lets GPT handle it more effectively than it would with a single prompt.

If you're not getting the desired result, consider breaking your task down into smaller, more focused prompts. Giving GPT this chain of prompts, one at a time, can make GPT more effective.
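The two-step example above can be sketched in a few lines of Python. Treat this as an illustration under stated assumptions, not Anthropic's actual prompts: the prompt wording is my own, the function names are hypothetical, and `model` is a stand-in for whatever function sends a prompt to your GPT and returns its reply.

```python
from typing import Callable

# Stand-in type: any function that takes a prompt and returns the model's reply.
Model = Callable[[str], str]


def extract_quotes(model: Model, document: str, question: str) -> str:
    """Step 1: ask only for the quotes that relate to the question."""
    prompt = (
        f"<document>\n{document}\n</document>\n\n"
        "Find the quotes in the document above that are most relevant to "
        f"this question: {question}\n"
        "If there are no relevant quotes, reply with 'No relevant quotes'."
    )
    return model(prompt)


def answer_from_quotes(model: Model, quotes: str, question: str) -> str:
    """Step 2: answer the question using only the quotes from step 1."""
    prompt = (
        f"<quotes>\n{quotes}\n</quotes>\n\n"
        f"Using only the quotes above, answer this question: {question}"
    )
    return model(prompt)


def answer_question(model: Model, document: str, question: str) -> str:
    """Chain the two prompts: the output of step 1 feeds step 2."""
    quotes = extract_quotes(model, document, question)
    return answer_from_quotes(model, quotes, question)
```

Each prompt has a single job, and the second prompt never sees the full document, only the quotes. The instructions sit after the document and the fallback reply covers the case where nothing relevant is found, which ties back to two of the 15 questions listed earlier.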

Prompt chaining can be overkill in a lot of situations

Prompt chaining is not a catch-all solution and should not be your first resort when facing a complex task.

Think step-by-step

For tasks that are moderately complicated, a simpler approach can often be just adding 'Think step-by-step' at the end of your prompt or as a follow-up to a response. This simple tweak can guide the AI to process the task more methodically.

Adding numbered steps to your prompt

For tasks that are a bit more complex, you can format your instructions as a numbered list. This helps the AI follow the steps more accurately. But beware, if the process becomes too long, like a 32-step checklist, the AI will get confused.

Always start with the simplest solution. It's usually faster, cheaper, and more effective. If a straightforward prompt doesn't work, then consider a step-by-step approach or a numbered list. The limits here will vary from task to task, and it's not always clear where that line is. When you feel like you're pushing the boundaries of what your GPT can handle, that's when prompt chaining becomes useful.
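Both of the lighter-weight options above are simple string tweaks, so they are cheap to try before building a chain. Here is a minimal Python sketch; the helper names `think_step_by_step` and `with_numbered_steps` and the "Follow these steps in order" wording are my own assumptions.

```python
def think_step_by_step(prompt: str) -> str:
    """Append the 'Think step-by-step' nudge to the end of a prompt."""
    return f"{prompt.rstrip()}\n\nThink step-by-step."


def with_numbered_steps(prompt: str, steps: list[str]) -> str:
    """Append the instructions as a numbered list for the model to follow."""
    numbered = "\n".join(
        f"{number}. {step}" for number, step in enumerate(steps, start=1)
    )
    return f"{prompt.rstrip()}\n\nFollow these steps in order:\n{numbered}"
```

For example, `with_numbered_steps("Review this landing page.", ["Summarize the offer", "List unclear claims"])` produces the original prompt followed by a two-item numbered list. If the list keeps growing, that is a sign to move to a prompt chain.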

An example of a Prompt Chain

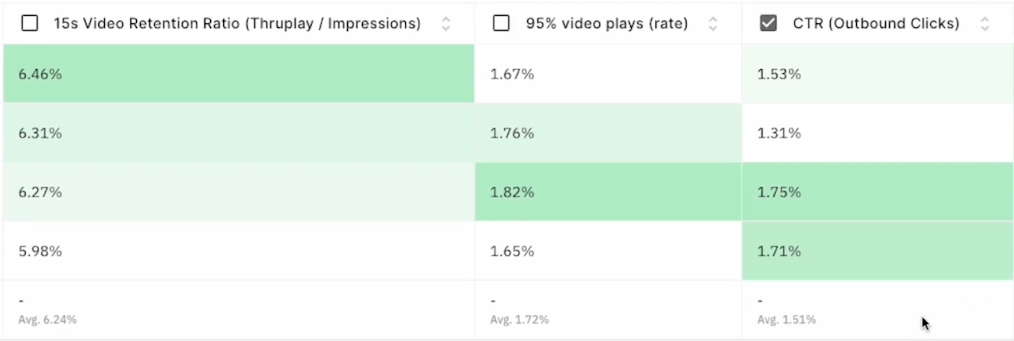

Mo Junayed, who helps run a company called Groth Digital, found a smart way to use ChatGPT to create ads for Facebook (now called Meta).

He came up with a list of nine questions or "prompts" to write a great ad:

Prompt 1: what are some of the problems [products you are trying to sell] solve

Prompt 2: Elaborate on problem [relevant number from the list of problems]

Prompt 3: What would be an ideal customer avatar that would purchase the [product] to solve the above problem

Prompt 4: Create an ideal customer avatar based on the information above

Prompt 5: Consider yourself an advanced marketer who has been advertising for decades, recreate the ideal customer avatar considering the information that we are trying to sell to [insert target audience]

Prompt 6: Write a sales letter to [avatar] using principles from breakthrough advertising and principles from David Ogilvy on copywriting

Prompt 7: Turn this sales letter into an ad script that would work on TikTok and uses millennial language

Prompt 8: Rewrite the ad script using the problem, agitate, solution framework, a script that is enough for a 45-second video and make it more persuasive

Prompt 9: Rewrite the ad script using the problem agitate solution framework, keep in mind that the person is frustrated and has used multiple products and [alternative solutions] and has found [product you are trying to sell] to be the best solution because the results are instant
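A chain like this runs inside one conversation, so each prompt can refer to "the information above". Here is a rough Python sketch of that loop. It is an assumption about how you might script it, not how Junayed ran his prompts: `run_chain` is a hypothetical name, `model` is a stand-in for a chat call that takes the message history and returns a reply, and the role/content message shape is only a common convention.

```python
from typing import Callable

Message = dict[str, str]
# Stand-in type: takes the conversation so far, returns the assistant's reply.
ChatModel = Callable[[list[Message]], str]


def run_chain(model: ChatModel, prompts: list[str]) -> list[Message]:
    """Send prompts one at a time, keeping every earlier turn in the history."""
    history: list[Message] = []
    for prompt in prompts:
        history.append({"role": "user", "content": prompt})
        reply = model(history)  # the model sees all previous prompts and replies
        history.append({"role": "assistant", "content": reply})
    return history
```

The bracketed placeholders have to be filled in before a prompt is sent, and some of them depend on earlier replies (Prompt 2 needs a problem number picked from the answer to Prompt 1). In practice you would pause between steps to read the reply and fill in the next prompt by hand, which is exactly the supervision this approach relies on.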

What's amazing is how fast and effective this method is. Junayed was able to make a full script for an ad in about 5 minutes. For this particular ad, he ran four different variations and found that the click-through rate (CTR) was 1.51%. Mo claims that the CTR is slightly above average, which shows that the ad is effective.

Prompt chains can help you use AI to do complicated, nuanced tasks, provided you know what you are doing and have a method or multi-step approach for completing the task. Human expertise and input are essential components of building an effective prompt chain. Prompt chains don't have to be based on personal experience; you can just as easily build a process out of someone else’s experience, or from a book, a course, or a workshop. The key takeaway is that we are not automating anything here; we are supervising GPT to augment real-world human expertise.

The trade-off is that prompt chains require expertise, are harder to construct, take longer to execute, and can be more expensive.

Using GPT to suggest a process

If you don’t have any real-world experience and are unsure how to approach a complex task, you can ask GPT to suggest a process for you as a helpful starting point.

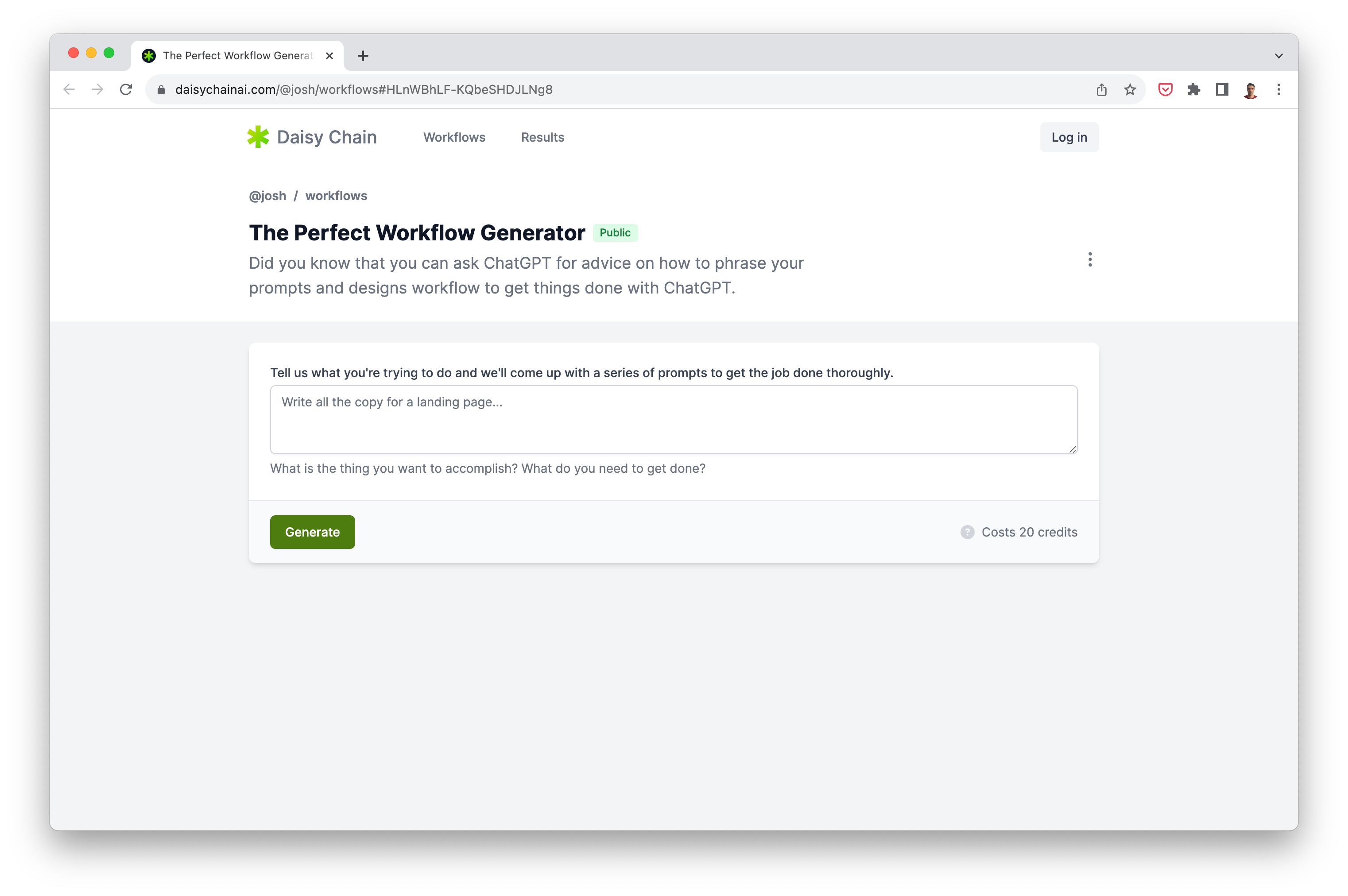

Take, for example, The Perfect Workflow Generator.

This tool leverages the power of ChatGPT to generate a series of prompts based on what you're trying to accomplish. You simply input the task you need to get done, and the tool will generate a workflow for you. It's like having a personal assistant helping you break down a complex task into manageable chunks.

However, it's essential to remember that it's only a starting point: a foundation that you can build upon as you develop experience completing the tasks. Over time, you can refine the process, making it more efficient and tailored specifically to how you work.

In conclusion, the power of tools like ChatGPT lies not in outright automation but in their ability to augment human capabilities. By using methods like prompt chaining, we can break down complex tasks into manageable chunks and guide the AI in a step-by-step manner, yielding significantly better results than you would get from a single prompt. AI models can suggest a process or a series of prompts to accomplish a task, but the effectiveness of these suggestions is often improved by human expertise and refinement. GPTs can provide a springboard from which you can develop your own unique workflows.