AI in Life Science: Designing an Experiment with ChatGPT

As artificial intelligence becomes more capable and more widely available, it's no surprise that researchers are exploring how AI can enhance their experiments.

In the life sciences, AI can help researchers generate hypotheses, design experiments, write R scripts for data analysis, and choose statistical tests for comparing datasets. While AI can be helpful, it cannot replace human expertise in scientific research.

Let's look at a real-world example of a scientist using artificial intelligence in her life sciences research.

Dr. Alana Rister wanted to try using AI to design an entire experiment.

She started by asking ChatGPT about research gaps in a broad area of study. It gave her a list of research questions that she could work on, and she decided to try one.

Prompt 1: Can you write a hypothesis for this research question? [question]

ChatGPT came up with a hypothesis. A hypothesis still has to be tested, so Dr. Rister asked how to do that.

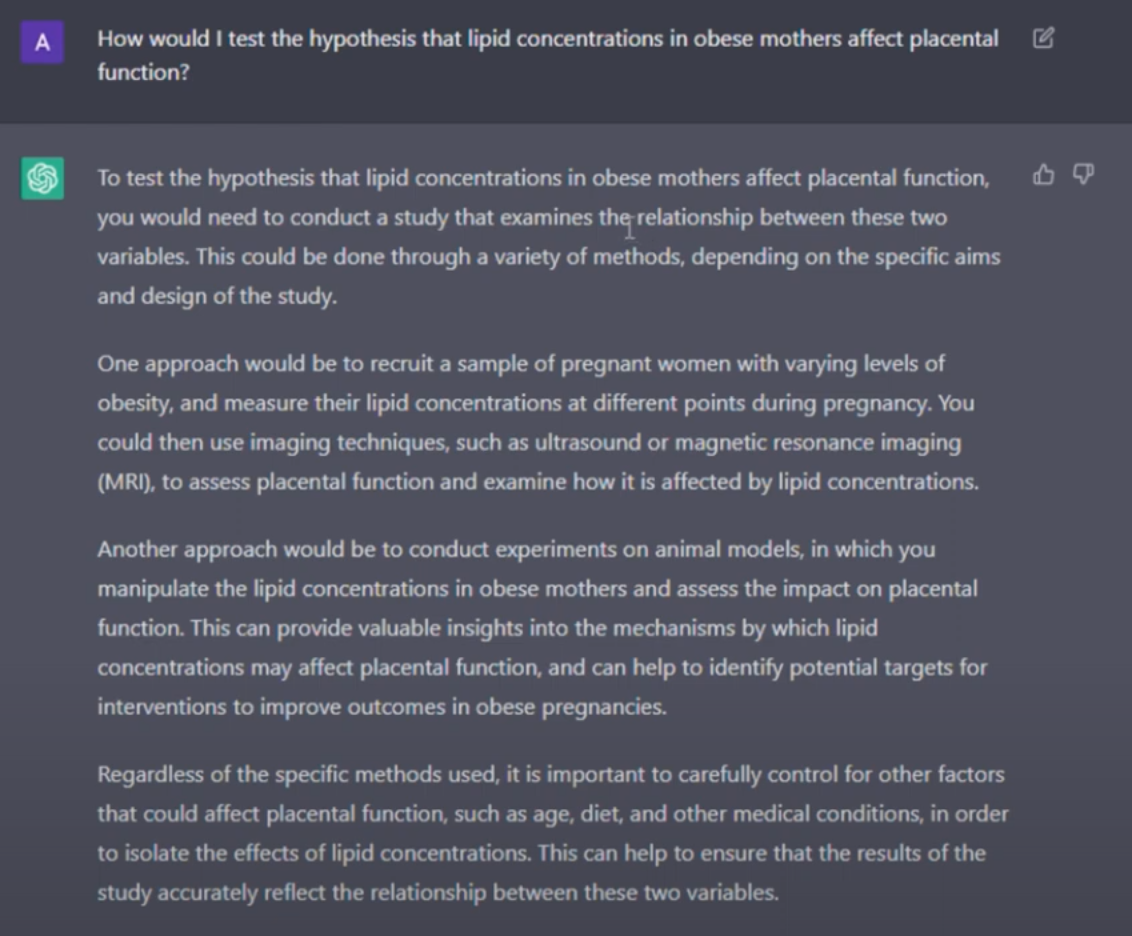

Prompt 2: How would I test this hypothesis that lipid concentrations in obese mothers affect placental function?

ChatGPT then laid out an entire experiment, with control and test subjects, which was impressive. Scientists normally have to think through experimental design very carefully, yet the tool produced a reasonable study design from just a few prompts.

Her next prompt was much more specific: it gave detailed instructions for an R script to analyze the data the experiment would produce.

Prompt 3: Can you write an R code to create a grouped bar chart with the mean weight on the y-axis, sex on the x-axis, and fill as treatment. I would also like error bars with the standard error of the mean of the weight?
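The chart requested in Prompt 3 has one bar per sex-by-treatment group: the bar height is the group's mean weight, and the error bar spans the standard error of the mean (SEM = sample standard deviation / √n). The summary behind such a chart can be sketched in Python as follows. This is not the R script ChatGPT produced; the records, group labels, and the `summarize` helper are hypothetical. In R, one common route is to build the same table with dplyr's `group_by()` and `summarise()` and then plot it with ggplot2's `geom_col()` and `geom_errorbar()`.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean, stdev

# Hypothetical records: (sex, treatment, weight). All values are invented for illustration.
records = [
    ("F", "control", 20.1), ("F", "control", 21.3), ("F", "control", 19.8),
    ("F", "treated", 24.0), ("F", "treated", 25.2), ("F", "treated", 23.7),
    ("M", "control", 25.4), ("M", "control", 26.0), ("M", "control", 24.9),
    ("M", "treated", 29.8), ("M", "treated", 31.1), ("M", "treated", 30.2),
]


def summarize(rows):
    """Return {(sex, treatment): (mean, sem)} where sem = sample SD / sqrt(n)."""
    groups = defaultdict(list)
    for sex, treatment, weight in rows:
        groups[(sex, treatment)].append(weight)
    summary = {}
    for key, weights in sorted(groups.items()):
        # stdev() is the sample standard deviation (n - 1 denominator) and needs n >= 2.
        sem = stdev(weights) / sqrt(len(weights))
        summary[key] = (mean(weights), sem)
    return summary


for (sex, treatment), (m, se) in summarize(records).items():
    # One bar per line: x = sex, fill = treatment, height = mean, error bar = mean +/- SEM.
    print(f"{sex}  {treatment:<8} mean={m:.2f}  SEM={se:.2f}")
```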

And now for the most exciting prompt: Dr. Rister asked ChatGPT which statistical test to use to compare her treatment groups.

Prompt 4: I have 3 treatment groups and 25 lipid concentrations for each sample in a treatment group. How should I compare these groups to determine if the lipid concentrations are significantly higher in one group compared to the other?

ChatGPT told her to use an analysis of variance (ANOVA), which tests whether the group means differ and yields a p-value for her observations. This is exciting because many life scientists are not trained statisticians and often struggle to choose the right test and interpret its results. With this tool, they can ask which test to use and usually get a sensible suggestion, much like turning to a lab mate and asking how best to test a hypothesis.
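ChatGPT's suggestion can be made concrete with a one-way ANOVA for a single lipid. The F statistic compares the variation between treatment-group means with the variation within groups, and the p-value is the upper tail of the F distribution with (k - 1, N - k) degrees of freedom, where k is the number of groups and N the total number of samples. The self-contained Python sketch below assumes invented concentrations and hypothetical function names; it computes the tail probability from the regularized incomplete beta function using the standard continued-fraction method.

```python
from math import exp, lgamma, log
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of samples, one per group."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: spread of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


def _guard(v, tiny=1e-300):
    """Keep continued-fraction terms away from zero to avoid division by zero."""
    return v if abs(v) > tiny else tiny


def _beta_cf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c, d = 1.0, 1.0 / _guard(1.0 - qab * x / qap)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even term of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d, c = 1.0 / _guard(1.0 + aa * d), _guard(1.0 + aa / c)
        h *= d * c
        # Odd term of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d, c = 1.0 / _guard(1.0 + aa * d), _guard(1.0 + aa / c)
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b) for 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = exp(lgamma(a + b) - lgamma(a) - lgamma(b) + a * log(x) + b * log(1.0 - x))
    # The continued fraction converges fastest here; otherwise use I_x(a, b) = 1 - I_{1-x}(b, a).
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def f_sf(f_stat, df1, df2):
    """P(F > f_stat) for an F distribution with (df1, df2) degrees of freedom."""
    if f_stat <= 0.0:
        return 1.0
    return _reg_inc_beta(df2 / 2.0, df1 / 2.0, df2 / (df2 + df1 * f_stat))


# Hypothetical concentrations of one lipid in three treatment groups (invented values).
group_a = [1.2, 1.4, 1.1, 1.3, 1.5]
group_b = [1.6, 1.8, 1.5, 1.7, 1.9]
group_c = [2.1, 2.4, 2.2, 2.0, 2.3]

f_stat, df1, df2 = one_way_anova([group_a, group_b, group_c])
print(f"F({df1}, {df2}) = {f_stat:.2f}, p = {f_sf(f_stat, df1, df2):.2e}")
```

A significant F only says that at least one group mean differs; it does not say which group is higher, which is what Prompt 4 asks. Answering that needs a post-hoc comparison such as Tukey's HSD, and running the test separately for each of the 25 lipids calls for a multiple-comparison correction (for example, Benjamini-Hochberg). In practice, `scipy.stats.f_oneway(*samples)` returns the same F statistic together with its p-value.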

Dr. Rister also had ChatGPT write an introductory paragraph for her project. Using ChatGPT to write papers, however, has been restricted by major scientific publishers. The restrictions followed the publication of a paper that listed ChatGPT as a co-author, which set off a heated debate in the scientific community about the future of publishing. Before that debate could spread too far, publishing giants such as Elsevier ruled out crediting AI tools as authors on a manuscript, and some journals went as far as treating AI-generated text as misconduct on a par with plagiarism.

It is unclear whether AI will come off the co-authorship blacklist anytime soon, but it's an exciting time for researchers, who can now ask a range of AI tools for help designing their experiments. They still need to use these tools with caution, because ChatGPT has become notorious in the research community for “hallucinating” papers and DOIs that do not exist.

Used cautiously, ChatGPT seems to do more good than harm. In its current state, however, researchers cannot rely on it without already knowing most of the subject themselves. For now, ChatGPT is more like a lab mate who can help you check your ideas or tell you that you need to read up some more. It cannot do a scientist's job, just as the scripts it writes often need modification before they work.

To see Dr. Rister's entire workflow, you can watch her YouTube video here.