Using AI For Political Gains: The Pros and Cons of AI in Politics

Since AI has become so popular lately, it's no surprise that people are finding ways to use it in politics. Some of these uses are more morally gray than others, but it's interesting to see how far AI has spread into our world.

You can use AI to help your advocacy campaigns by generating the content for your campaign and brainstorming different ways to make it work. You can also use it to do some research, but it's not really the most effective way to use AI due to its lack of reliability and dataset limitations.

Let's take a look at how people are using AI for different political campaigns.

Using AI for animal advocacy

Several prompts can help speed up different parts of a campaign for better animal rights. Let's look at a few cases and specific prompts you can use.

Helping animal advocacy work

Prompt: Write an email to subscribers of a newsletter about the potential of using ChatGPT for animal advocacy work, including a number of use cases as examples.

And ChatGPT produces this newsletter:

Now this is a pretty great newsletter, but there are ways to make it even better, and you can easily do that using just a few more prompts in ChatGPT. The mastermind behind these creative newsletter prompts is Jens Lennartsson, who runs a newsletter marketing company called The Marketing Mule.

Brainstorming ways a campaign could be undermined

AI can strengthen a campaign, but it can also be used to bring one down. You can turn that to your advantage: ask it how opponents might use it to dismantle your campaign, then plan workarounds.

Prompt: How could ChatGPT be harmful to animal advocacy work or be used against it?

And ChatGPT gives you several ways that your campaign can be undermined by people in your opposition:

In fact, you can even do a premortem to see how your campaign might fail and then devise ways to overcome that. Here's how you can run a premortem using AI for a project.

Writing Tweets using ChatGPT

Prompt: Write a tweet about ending factory farming that a Republican living in Georgia would agree with.

This is pretty straightforward: ChatGPT gives you a short tweet, well within Twitter's 280-character limit, that you can paste straight into your campaign's Twitter account:

A great way to make this even more powerful is to generate multiple tweets and schedule them to post on your account automatically with a tool like the Chirr App.
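If you go the batch route, it helps to check your drafts before loading them into a scheduler. Here is a minimal Python sketch, assuming you have already pasted ChatGPT's drafts into a list: it drops empty drafts and drafts over Twitter's 280-character limit, then spaces the rest out at a fixed interval. The function name `plan_schedule` and the 24-hour spacing are illustrative choices, not part of the Chirr App or any other tool, and the sketch does not connect to any scheduling service.

```python
from datetime import datetime, timedelta

# Twitter's limit for a standard tweet. len() only approximates Twitter's own
# weighted count, which treats links and some characters differently.
TWEET_LIMIT = 280


def plan_schedule(drafts, start, interval_hours=24):
    """Hypothetical helper: drop empty or over-long drafts, then give each
    remaining draft a posting time, one every `interval_hours` from `start`.

    Returns (posting_time, text) pairs to enter into a scheduling tool by hand.
    """
    usable = [d.strip() for d in drafts if d.strip() and len(d.strip()) <= TWEET_LIMIT]
    return [
        (start + timedelta(hours=i * interval_hours), text)
        for i, text in enumerate(usable)
    ]


if __name__ == "__main__":
    # Placeholder drafts standing in for ChatGPT output.
    drafts = [
        "Draft tweet one about ending factory farming.",
        "x" * 300,  # too long, so it is dropped
        "Draft tweet two about ending factory farming.",
    ]
    for when, text in plan_schedule(drafts, datetime(2024, 1, 1, 9, 0)):
        print(when.isoformat(), text)
```

Because `len()` is only an approximation, give borderline drafts containing links or emoji a manual check before scheduling them.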

The mind behind all of these prompts is Steven Rouk, the founder of Connect For Animals, a platform that seeks to put an end to factory farming. He's deeply involved in animal advocacy, contributing his expertise in data analysis, strategy, community building, and grassroots activism. When he's not immersed in his mission, Steven enjoys exploring topics like philosophy and ethics through his writing. He also finds solace in reading nonfiction and maintaining an active lifestyle with his love for running.

These prompts can be great for speeding up content generation, but they have a few pitfalls. As with most things ChatGPT writes or creates, you will need to proofread everything thoroughly. You also need to make sure the content matches your tone. Fortunately, you can do that easily by adding a short phrase to your prompt: write it in a [insert tone] tone. For instance, "Please write a newsletter about the benefits of veganism in a tone that appeals to a Gen Z audience."
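If you need the same piece for several audiences, you can apply that tone phrase systematically instead of retyping it. The Python sketch below is a hypothetical helper (`tone_prompt` and `prompt_variants` are not part of any library, and the audiences are only examples). It just builds prompt strings that you would then paste into ChatGPT.

```python
def tone_prompt(task: str, tone: str) -> str:
    """Turn a base task into a prompt that also asks for a specific tone."""
    task = task.strip().rstrip(".")
    return f"Please {task} in a tone that suits {tone}."


def prompt_variants(task: str, tones: list[str]) -> dict[str, str]:
    """Build one prompt per target tone or audience for the same task."""
    return {tone: tone_prompt(task, tone) for tone in tones}


if __name__ == "__main__":
    base = "write a newsletter about the benefits of veganism"
    for tone, prompt in prompt_variants(base, ["a Gen Z audience", "retired farmers"]).items():
        print(f"[{tone}] {prompt}")
```

Keeping the task and the tone separate makes it easy to compare how ChatGPT adapts the same message, though every variant still needs proofreading.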

If you want to learn more about Steven's workflow, you can read the whole piece here.

Using AI for targeted political campaigns

AI has also found its way into political campaigns and elections. However, its use there has raised ethical concerns and posed a threat to democracy.

During the 2016 US presidential election, evidence surfaced suggesting that AI-powered technologies were employed to manipulate citizens. Cambridge Analytica, a data science firm, implemented an extensive advertising campaign that targeted susceptible voters based on their individual psychology. This operation involved the use of big data and machine learning to influence people's emotions. Voters received personalized messages tailored to their susceptibilities, with some receiving fear-based ads and others receiving ads appealing to tradition and community.

The success of this micro-targeting operation relied on the availability of real-time data from voters, including their online behavior, consumption patterns, and relationships. These data points were used to construct unique profiles of voter behavior and psychology.

The issue with this approach was not the technology itself but rather the covert nature of the campaigning and the insincerity of the political messages being disseminated. Candidates like Donald Trump, with flexible campaign promises, were particularly well-suited to this tactic. Each voter received a tailored message that emphasized a different aspect of a particular argument, effectively presenting a different version of the candidate to each person. The key was finding the right emotional triggers to incite action.

AI's manipulative potential was not limited to the US. In the UK, it remained unclear what role AI played in the campaigning leading up to the Brexit referendum. Additionally, political bots, autonomous accounts programmed to spread one-sided political messages, were utilized during the 2016 US presidential election and various other key political elections worldwide. These bots spread misinformation and fake news on social media platforms, shaping public discourse and distorting political sentiment. They even infiltrated hashtags and pages used by opposing candidates to spread automated content.

While it was easy to blame AI technology for these manipulations and their influence on election outcomes, the underlying technology itself was not inherently harmful. The algorithms used to mislead and confuse could be repurposed to support democracy.

Ethical and legitimate uses of AI in politics were possible. For instance, political bots could be programmed to intervene when people share articles containing known misinformation, issuing warnings and explanations to debunk falsehoods. AI could also be employed to listen to people's opinions and ensure their voices were heard by elected representatives. Micro-targeting campaigns could be deployed based on insights gathered to educate voters on political issues and help them form their own informed opinions.

The overwhelming amount of political information presented in TV debates and newspapers could be tackled with AI, allowing individuals to discover candidates' political positions based on their specific interests. Personalized political ads should serve voters by informing them rather than undermining their interests.

The use of AI in politics was not a passing trend but a valuable tool for politicians and their campaigns. However, it was crucial for them to commit to using AI ethically and judiciously, ensuring that their attempts to sway voters did not undermine democracy.

You can read more about this story here.

You can also use ChatGPT to help with some of your research, but it's rough around the edges there. Its training data only goes up to 2021, so it doesn't know about anything that happened after that. I will talk more about this in the next section.

Leveraging generative AI for political research

Using AI, especially ChatGPT, for research isn't a great idea just yet, because it may not give you verified information. In fact, it may even "hallucinate" some of what it tells you. However, you can still use it to get a head start.

Generating a summary of current information on a topic

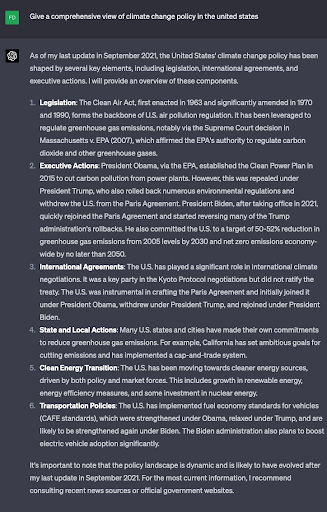

Prompt: Give a comprehensive overview of the current state of climate change policy in the United States.

And ChatGPT would produce something like this:

Figuring out someone's stance on a particular issue

Prompt: Summarize Senator Chuck Schumer's voting record and public stance on climate change up until September 2021

The drawback is that even if ChatGPT summarizes someone's position on an issue, you still need to verify it thoroughly against what they actually said and how they voted. It's a good place to start, but it isn't reliable. AI may become more dependable for research as it advances, but for now, caution is advised.

You can read more about these prompts here.

Conclusion

To summarize, AI can be used in different ways in political campaigns. It's most helpful (and most ethical) when you use it to generate content, brainstorm ways to make your campaign work, or find the weak areas where it could fail so that you can fortify them.

You can also feed people's data, such as their personality types and preferences, into AI tools to tailor your campaign's outreach to individual supporters.

While AI is still evolving, it's already powerful and already being used in politics. However, its use in politics, often justified in the name of technological progress, raises many questions about what is moral and permissible. Hopefully, as AI becomes more mainstream, we can find ways to regulate its use in politics ethically, instead of letting it run wild and manipulate the masses through sophisticated algorithms.